posted 07-31-2009 05:46 AM

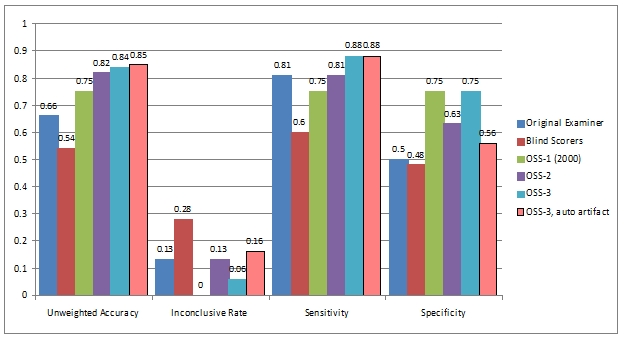

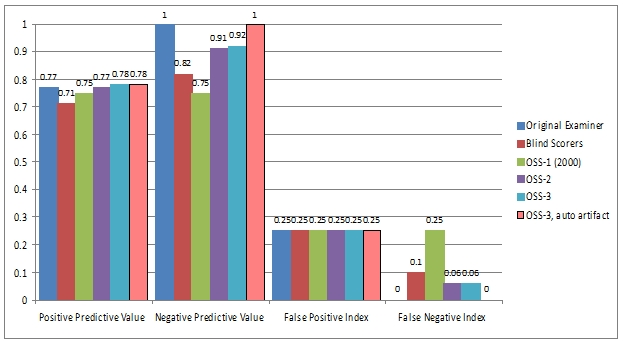

The results shown by the lighter blue bars, second from the right in each group, are from the same OSS-3 version as always. OSS-3 is performing as expected with the Army cases.

The results shown by the pukey-salmon-colored bars, on the right end of each group, were obtained using OSS-3 after replacing the Test of Proportions with the output of another project, one intended to automate the detection of artifacts.

In this experiment, the artifact rejection algorithm was pointed only at the pneumos. The artifact rejection method uses a transformation method that I first used in the RI algorithm project and later realized could be coupled with the Test of Proportions.

If you look at the .pdf link, you'll notice the algorithm doesn't agree with us on every detail, but it correctly classifies both the non-CM case and the CMs. At present, if the data are messy and widely varied, the normal-looking segments may be regarded as the mathematical outliers.
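To illustrate the outlier problem in general terms, here is a minimal sketch of one common way to flag outlying measurements. The transformation used in the actual artifact rejection method isn't described in this post, so this substitutes a different, standard technique: the median/MAD "modified z-score" of Iglewicz and Hoaglin, with their conventional 3.5 cutoff. The function names and measurement values are hypothetical.

```python
import statistics


def modified_z_scores(values):
    """Return Iglewicz-Hoaglin modified z-scores based on the median and MAD."""
    med = statistics.median(values)
    # Median absolute deviation: a spread estimate that resists outliers.
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        # Most values are identical, so there is no usable spread to compare against.
        return [0.0 for _ in values]
    return [0.6745 * (v - med) / mad for v in values]


def flag_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds the threshold."""
    return [i for i, z in enumerate(modified_z_scores(values)) if abs(z) > threshold]


# Hypothetical segment measurements with one distorted value.
print(flag_outliers([10.2, 9.8, 10.5, 10.1, 25.0, 9.9]))   # [4]

# Hypothetical messy chart: most segments distorted, two normal-looking ones.
print(flag_outliers([30.0, 31.0, 29.5, 30.5, 10.0, 10.2]))  # [4, 5]
```

The second call shows the limitation in miniature: when most of the measurements are distorted, the median and MAD describe the distorted values, and the two normal-looking segments are the ones flagged as outliers.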

Until we are sure that an algorithm can assess data quality and identify artifacts as reliably as, or more reliably than, a human, the ethical and responsible thing to do is not to expect the algorithm to make the decision. Instead, we make the decision, and perhaps let the algorithm do the math and give us the level of significance.

The same goes for artifacts. Let the algorithm do the math and tell us the level of significance for two things: 1) whether any of the measured values are outside normal limits compared to the others, and 2) whether the artifact events fit the pattern of activity we would expect if they occurred due to random chance alone. Just as with the manual Test of Proportions in the present OSS-3, when the p-value is significant for non-random activity, that becomes a mathematical basis for concluding the activity was systematic. The only new element is a method for comparing the measurements themselves and determining whether any of them appear to be outlier values indicative of artifact events.
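Point 2 can be framed as a one-sided exact binomial test. This is a generic sketch, not the OSS-3 Test of Proportions itself. It assumes, hypothetically, that we count how many of `n` artifact events landed in segments of one type, and that under random chance alone each event would land there with probability `p0` (for example, the share of segments that are of that type). The function name is made up for illustration.

```python
from math import comb


def binomial_upper_tail(k, n, p0):
    """One-sided exact p-value: P(X >= k) for X ~ Binomial(n, p0).

    k  -- hypothetical count of artifact events in one segment type
    n  -- hypothetical total number of artifact events
    p0 -- probability an event lands in that segment type by chance alone
    """
    return sum(comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(k, n + 1))


# All 6 hypothetical artifacts fell in one segment type that makes up half the segments.
print(binomial_upper_tail(6, 6, 0.5))  # 0.015625

# Only 3 of 6 did, which is what chance alone would predict.
print(binomial_upper_tail(3, 6, 0.5))  # 0.65625
```

With 6 of 6 events in one segment type, the p-value is 1/64 (about 0.016), which would be significant at a conventional 0.05 level and would point toward systematic activity. A 3 of 6 split gives about 0.66, consistent with random chance.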

There is obviously a lot remaining to be done. This project has been on a slow trajectory for nearly two years. The result is an improvement of about 1 percentage point in decision accuracy. The cost is a slight increase in INCs, as some cases won't be scored due to artifacts.

The difference itself will not be statistically significant. However, even non-significant incremental improvements, if we stack up enough of them, may become important.
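One way to see why a gain this small is unlikely to reach significance: if both versions score the same cases, the comparison is paired, and only the cases where the two versions disagree carry information. The sketch below uses the exact form of McNemar's test on those discordant counts. The counts are hypothetical, not the Army results, and the sketch ignores INCs by treating each case as simply correct or incorrect.

```python
from math import comb


def mcnemar_exact_p(b, c):
    """Two-sided exact McNemar p-value from the discordant counts.

    b -- hypothetical number of cases correct only under the new version
    c -- hypothetical number of cases correct only under the old version
    """
    n = b + c
    if n == 0:
        return 1.0  # no disagreements, so no evidence of a difference
    k = min(b, c)
    # Probability of a split at least this lopsided if each disagreement is a coin flip.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2**n
    return min(1.0, 2 * tail)


print(mcnemar_exact_p(3, 2))   # 1.0
print(mcnemar_exact_p(10, 0))  # 0.001953125
```

With 3 cases gained and 2 lost (a net gain of one case, or 1 point on 100 cases), the p-value is 1.0. A difference would need to be far more lopsided, such as 10 to 0, before it became significant. The exact form is used here rather than the chi-square approximation because the discordant counts are so small.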

r

------------------

"Gentlemen, you can't fight in here. This is the war room."

--(Stanley Kubrick/Peter Sellers - Dr. Strangelove, 1964)

Polygraph Place Bulletin Board

Professional Issues - Private Forum for Examiners ONLY

OSS-3 Army MGQT validation data - APA poster